# Apache Airflow operators password

To run the project you need Docker with at least 4GB of RAM and Docker Compose. We will use Metabase to visualize our data. Read this post for information on setting up CI/CD, DB migrations, IaC (Terraform), `make` commands, and automated testing.

Run these commands to set up your project locally and on the cloud. First clone the project and `cd` into the project directory, then run and test it locally:

1. `make up`: starts the Docker containers on your computer and runs the migrations.
2. `make ci`: runs auto formatting, lint checks, and all the test files under `tests`.

Next, create the AWS services with Terraform:

3. `make tf-init`: only needed on your first Terraform run (or if you add new providers).
4. `make infra-up`: type in `yes` after verifying the changes Terraform will make.
5. Wait until the EC2 instance is initialized. You can check this in the AWS UI: the "Status Check" column on the EC2 console should read "2/2 checks passed" before you proceed. Then wait another 5 minutes, because Airflow takes a while to start up.
6. Create the Redshift Spectrum tables (tables with data in S3).
7. `make cloud-airflow`: forwards the Airflow port from EC2 to your machine and opens Airflow in the browser. The user name and password are both `airflow`.

# Apache Airflow operators movie

If you are interested in a stream processing project, please check out Data Engineering Project for Beginners - Stream Edition. If you are interested in a local-only data engineering project, check out this post.

Let's assume that you work for a user behavior analytics company that collects user data and creates a user profile. We are tasked with building a data pipeline to populate the `user_behavior_metric` table. The `user_behavior_metric` table is an OLAP table, meant to be used by analysts, dashboard software, etc. It is built from:

- `user_purchase`: OLTP table with user purchase information.
- `movie_review.csv`: data sent every day by an external data vendor.

We will be using Airflow to orchestrate the following tasks:

1. Classifying movie reviews with Apache Spark.
2. Loading the classified movie reviews into the data warehouse.
3. Extracting user purchase data from an OLTP database and loading it into the data warehouse.
4. Joining the classified movie review data and user purchase data to get user behavior metric data.
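The four orchestrated tasks imply a dependency order: the join can only run once both loads have finished. The sketch below illustrates that ordering with Python's standard-library `graphlib` rather than Airflow itself. The task names and the exact dependency edges are assumptions made for illustration; they are not taken from the project's DAG.

```python
from graphlib import TopologicalSorter

# Map each task to the set of tasks it depends on.
# Task names and edges are hypothetical, inferred from the task list.
dependencies = {
    "classify_movie_reviews": set(),
    "load_classified_reviews": {"classify_movie_reviews"},
    "extract_and_load_user_purchases": set(),
    "build_user_behavior_metric": {
        "load_classified_reviews",
        "extract_and_load_user_purchases",
    },
}

# static_order() yields every task after all of its dependencies.
run_order = list(TopologicalSorter(dependencies).static_order())
print(run_order)
```

In Airflow the same edges would be declared between operators; the point here is only that the review branch and the purchase branch are independent of each other until the final join.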

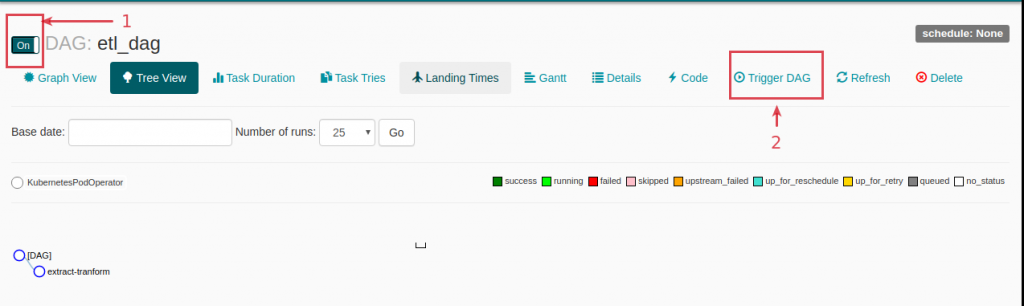

# Apache Airflow operators how to

Setting up a data engineering project while conforming to best practices can be extremely time-consuming. This project is aimed at you if you are:

- A data analyst, student, scientist, or engineer looking to gain data engineering experience, but unable to find a good starter project.
- Wanting to work on a data engineering project that simulates a real-life project.
- Looking for an end-to-end data engineering project.
- Looking for a good project to get data engineering experience for job interviews.

By working through it you will:

- Set up Apache Airflow, AWS EMR, AWS Redshift, AWS Spectrum, and AWS S3.
- Learn how to design and build a data pipeline from business requirements.
- Learn how to spot failure points in data pipelines and build systems resistant to failures.

A real data engineering project usually involves multiple components. Here these include loading user purchase data into the data warehouse and loading classified movie review data into the data warehouse.

There are two ways to instantiate the `DatabricksSubmitRunOperator`. The first is to pass the payload for the `runs/submit` endpoint through the `json` parameter: `notebook_run = DatabricksSubmitRunOperator(task_id="notebook_run", json=json)`, where `json` holds that payload.

The second way to accomplish the same thing is to use the named parameters of the `DatabricksSubmitRunOperator` directly. There is one named parameter for each top-level parameter in the `runs/submit` endpoint. When using named parameters you must specify the following.

Task specification, which should be one of:

- `spark_jar_task`: main class and parameters for the JAR task
- `notebook_task`: notebook path and parameters for the task
- `spark_python_task`: Python file path and parameters to run the Python file with
- `spark_submit_task`: parameters needed to run a spark-submit command
- `pipeline_task`: parameters needed to run a Delta Live Tables pipeline
- `dbt_task`: parameters needed to run a dbt project

Cluster specification, which should be one of:

- `new_cluster`: specs for a new cluster on which this task will be run
- `existing_cluster_id`: ID of an existing cluster on which to run this task

`pipeline_task` may refer to either a `pipeline_id` or a `pipeline_name`.

If both the `json` parameter and the named parameters are provided, they are merged together. The named parameters take precedence and override the top-level `json` keys.
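The precedence rule for `json` versus named parameters can be illustrated with a small plain-Python sketch. This is not the operator's implementation: `merge_run_params` is a hypothetical helper, and the payload values are placeholders. It only shows what "named parameters override the top-level json keys" means in practice, assuming a shallow, top-level merge.

```python
from typing import Any, Dict, Optional


def merge_run_params(
    json: Optional[Dict[str, Any]] = None, **named: Any
) -> Dict[str, Any]:
    """Hypothetical helper: shallow-merge a payload with named parameters."""
    merged = dict(json or {})  # copy so the caller's payload is not mutated
    # Only named parameters that were actually provided replace json keys.
    merged.update({key: value for key, value in named.items() if value is not None})
    return merged


# Placeholder payload: one task specification and one cluster specification.
payload = {
    "new_cluster": {"spark_version": "placeholder-version", "num_workers": 2},
    "notebook_task": {"notebook_path": "/placeholder/notebook"},
}

# The named new_cluster replaces the whole top-level "new_cluster" key.
merged = merge_run_params(
    payload,
    new_cluster={"spark_version": "placeholder-version", "num_workers": 8},
)
print(merged["new_cluster"]["num_workers"])
```

Because the merge is at the top level, a named `new_cluster` replaces the entire `new_cluster` entry from `json` rather than combining the two field by field, while keys that have no named counterpart (here `notebook_task`) pass through unchanged.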
